SimulateVerify.AI

Description

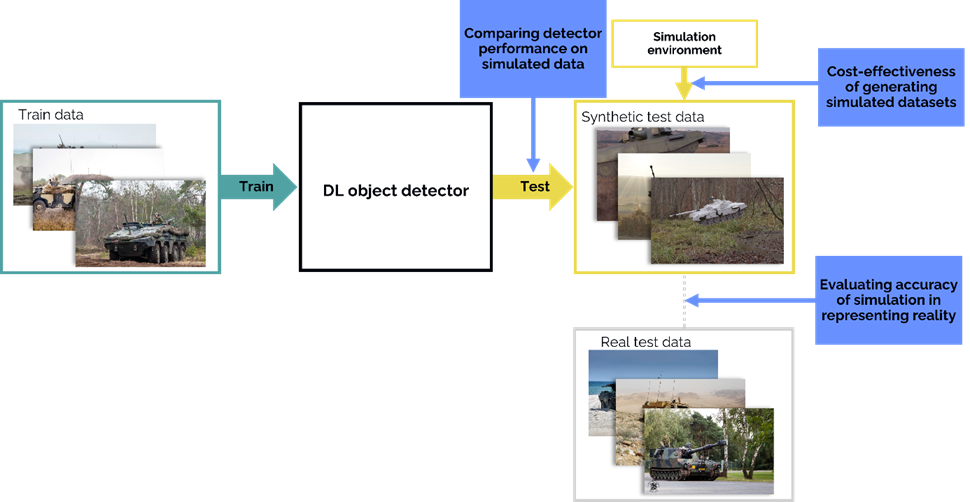

The SimulateVerify.AI project explores how the scarcity of large real-world datasets can be addressed by using simulated data effectively during the testing phase of deep learning models.

Problem Context

Artificial Intelligence (AI)-based computer vision methods are becoming increasingly important for military, automotive, and other high-risk applications. During operational use, particularly in a military context, vast amounts of sensor data arrive from both manned and unmanned platforms. Handling such a large data volume requires automated analysis methods for autonomous and/or decision-support systems. Deep Learning (DL) has emerged as the most effective methodology for computer vision tasks such as object detection, target identification, and tracking. DL models rely strongly on the availability of large datasets, both for training and for accurately testing their performance and reliability under diverse operational conditions.

The acquisition of large and varied datasets for military purposes is challenging. First, the restricted nature of military environments often limits data availability. Second, the landscape of military engagement is constantly evolving, with new threats, technologies, and tactics. Third, the models must perform in various environments, terrains, and weather conditions, depending on the operational domain. These factors complicate the gathering and maintenance of datasets that represent the required operational conditions. Moreover, live exercises can be costly in terms of logistics, equipment, and personnel, and they offer little control over the environmental conditions under which data is recorded.

Solution

The use of simulated data could address these challenges. It offers a cost-effective method to generate large datasets of images, without requiring access to actual operational recordings. Simulation provides full control over object and scene characteristics, allowing for rapid adaptation to new circumstances and exposing DL models to diverse conditions tailored to specific operational requirements. However, for simulated data to function as a virtual proxy for real-world data in the testing process of the DL model, its suitability, appropriateness, and the resulting cost-effectiveness must be evaluated.

Results

We submitted a paper to the SPIE Sensors + Imaging conference (track Artificial Intelligence for Security and Defence Applications II), and it was presented in Edinburgh in September 2024. In addition, the results were presented at a meeting of the NATO task group SET-343 (Using Simulation to Train AI) to representatives from approximately 15 different institutes. The paper explores the use of simulated data in the testing phase of AI models, as well as methods to evaluate how accurately the simulation represents real-world performance. While the study demonstrates promising results, several areas require further research to strengthen the validation and application of simulation. These include 1) the trade-off between the effort invested in simulating challenging conditions and the resulting gain in cost-effectiveness from switching to simulated data, 2) specific aspects of the testing phase where simulation could be useful, such as stress-testing, and 3) methods to validate the use of simulated data that can be derived from the simulations directly, without relying on real-world datasets.
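
As a rough illustration of what evaluating simulation against real-world performance can involve, the sketch below compares per-condition scores of one model on a real test set and on a simulated one. It is not the method used in the paper: the function name `sim_to_real_agreement`, the dictionary inputs keyed by condition name, and the choice of mean absolute gap plus Pearson correlation as agreement measures are all assumptions made for this example.

```python
from math import sqrt


def sim_to_real_agreement(real_scores, sim_scores):
    """Compare per-condition scores from a real and a simulated test set.

    Both arguments are hypothetical dicts mapping a condition name
    (for example a weather or terrain label) to a score such as accuracy.
    Only conditions present in both dicts are compared.
    """
    conditions = sorted(set(real_scores) & set(sim_scores))
    if len(conditions) < 2:
        raise ValueError("need at least two shared conditions")

    real = [real_scores[c] for c in conditions]
    sim = [sim_scores[c] for c in conditions]
    n = len(conditions)

    # Average absolute difference between simulated and real scores.
    mean_abs_gap = sum(abs(r - s) for r, s in zip(real, sim)) / n

    # Pearson correlation: do the two test sets rank conditions alike?
    mean_r = sum(real) / n
    mean_s = sum(sim) / n
    cov = sum((r - mean_r) * (s - mean_s) for r, s in zip(real, sim))
    var_r = sum((r - mean_r) ** 2 for r in real)
    var_s = sum((s - mean_s) ** 2 for s in sim)
    if var_r == 0 or var_s == 0:
        pearson_r = float("nan")  # undefined when one side is constant
    else:
        pearson_r = cov / sqrt(var_r * var_s)

    return {
        "conditions": conditions,
        "mean_abs_gap": mean_abs_gap,
        "pearson_r": pearson_r,
    }
```

A small gap together with a high correlation would suggest that the simulated test set tracks the real one across the compared conditions. Note that this check still depends on real-world data, so it does not address the third open question above.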

Contact

- Veronique Marquis, Project Manager, e-mail: veronique.marquis@tno.nl